Author: Jarosław Jamka

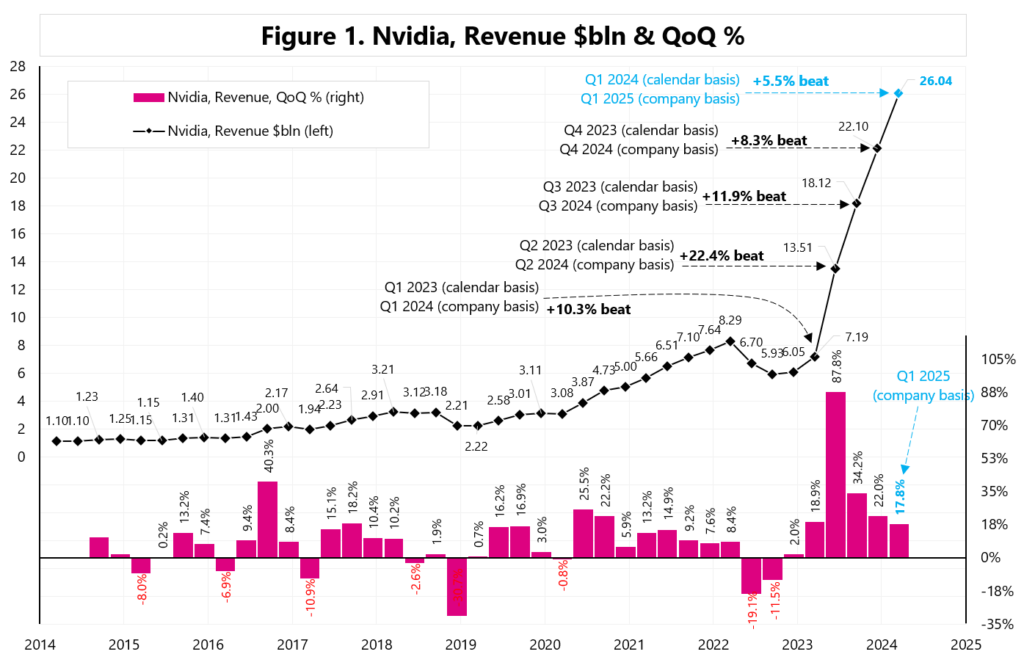

Nvidia once again beat earnings expectations comfortably (see Figure 1).

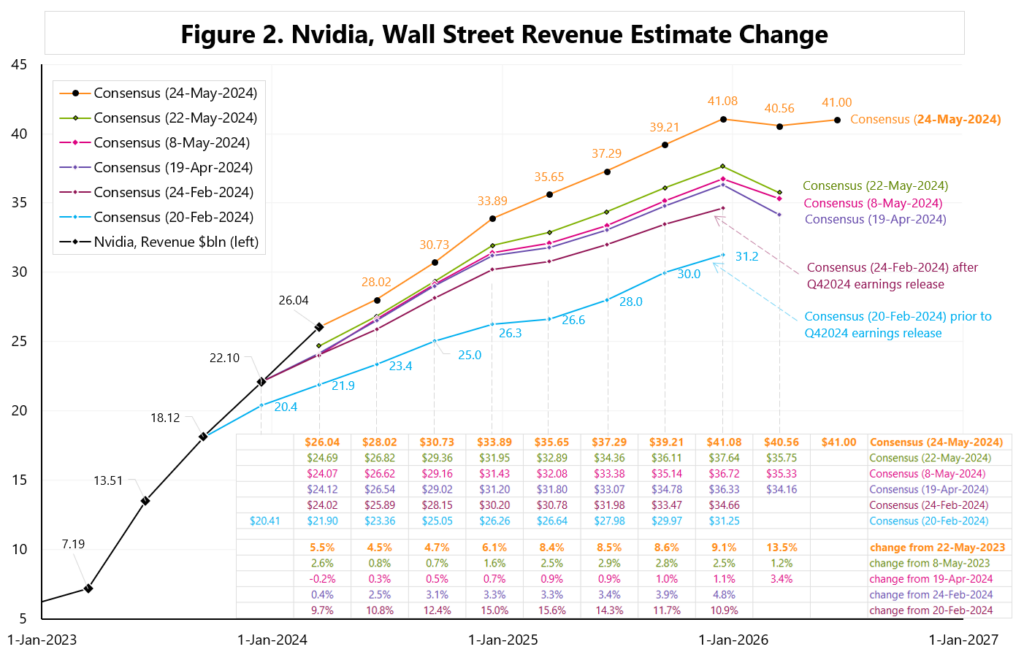

Two days after the May 22 earnings release, the Wall Street consensus for future revenue is approximately 5-10% higher than the consensus immediately before the release (see Figure 2).

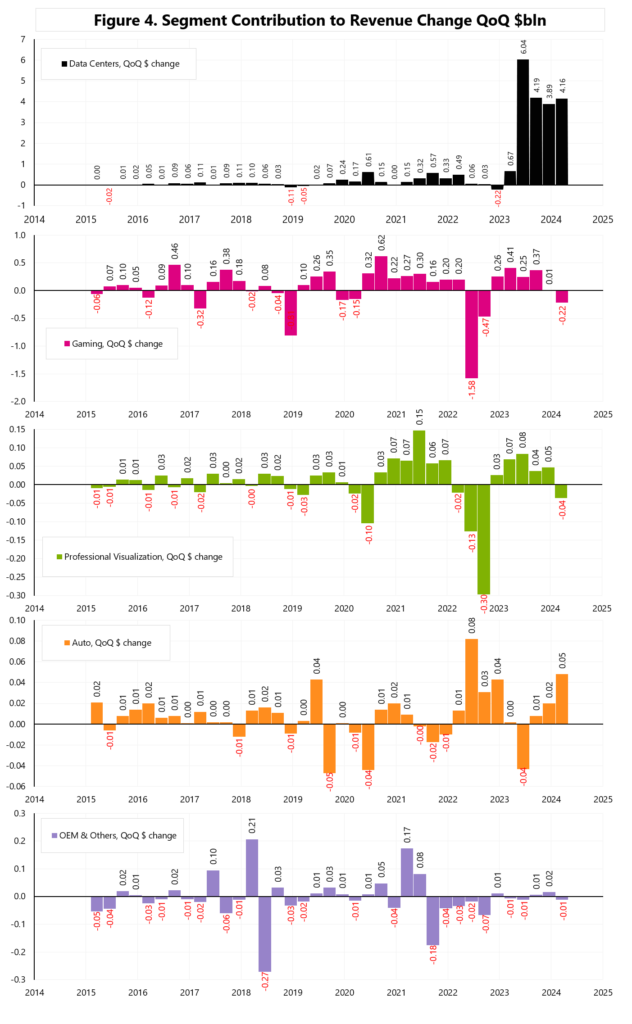

But the most important question for investors remains further revenue growth. Broken down by market platform, the company's sales are split into five segments, but as much as 87% of sales come from the Data Center segment. Interestingly, the Data Center segment accounts for 97% of year-over-year sales growth and for 106% of quarter-over-quarter sales growth; a share above 100% means that the remaining segments, taken together, shrank sequentially. See Figures 3 and 4.
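A segment's share of growth is its revenue change divided by the change in total revenue, which is how one segment can account for more than 100% of growth when the others decline. The minimal Python sketch below illustrates this; the function name `growth_contribution` and the round numbers in the example are hypothetical and are not Nvidia's reported figures.

```python
def growth_contribution(seg_now: float, seg_prev: float,
                        total_now: float, total_prev: float) -> float:
    """Share of the change in total revenue attributable to one segment.

    Hypothetical helper for illustration: a value above 1.0 means the
    segment grew by more than the whole company, i.e. the other
    segments shrank in aggregate over the same period.
    """
    total_change = total_now - total_prev
    if total_change == 0:
        # Contribution is undefined when total revenue is flat.
        raise ValueError("total revenue did not change; contribution undefined")
    return (seg_now - seg_prev) / total_change


# Hypothetical example: total revenue 1000 -> 1100, one segment 600 -> 706,
# so the remaining segments fell from 400 to 394.
share = growth_contribution(706, 600, 1100, 1000)
print(f"{share:.0%}")  # prints "106%"
```

Because the denominator is the net change in total revenue, the shares of all segments always sum to 100%, so any segment above 100% must be offset by negative contributions elsewhere.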

Approximately 45% of Data Center sales, or roughly $10.2 billion in the latest quarter, come from four companies: Amazon, Alphabet, Meta and Microsoft (the so-called hyperscalers).

CFO commentary:

“Data Center revenue of $22.6 billion was a record, up 23% sequentially and up 427% year-on-year, driven by continued strong demand for the NVIDIA Hopper GPU computing platform. (…) Strong sequential data center growth was driven by all customer types, led by enterprise and consumer internet companies. Large cloud providers continue to drive strong growth as they deploy and ramp NVIDIA AI infrastructure at scale and represented the mid-40s as a percentage of our Data Center revenue.”
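The CFO's figures can be used to back out the approximate Data Center revenue of the prior quarter and of the year-ago quarter, and the dollar value behind the "mid-40s" share from large cloud providers. The minimal Python sketch below does this. Reading "mid-40s" as exactly 45% is an assumption, and because the quoted growth rates are rounded, the results are approximations only. The helper name `prior_value` is hypothetical.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Revenue in the comparison period implied by current revenue and % growth."""
    return current / (1 + growth_pct / 100)


dc_revenue = 22.6  # USD billions, Data Center revenue quoted by the CFO

prev_quarter = prior_value(dc_revenue, 23)   # up 23% sequentially -> about 18.4
year_ago = prior_value(dc_revenue, 427)      # up 427% year-on-year -> about 4.3

hyperscaler_share = 0.45  # assumption: "mid-40s" read as 45%
hyperscaler_revenue = dc_revenue * hyperscaler_share  # about 10.2

print(f"Implied Data Center revenue, prior quarter: ${prev_quarter:.1f}B")
print(f"Implied Data Center revenue, year-ago quarter: ${year_ago:.1f}B")
print(f"Implied revenue from large cloud providers: ${hyperscaler_revenue:.1f}B")
```

The last figure matches the roughly $10.2 billion attributed to the hyperscalers; note that a 427% increase means revenue is 5.27 times the year-ago level, not 4.27 times.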

In a sense, this is a virtuous cycle: more advanced AI models need more computing power, and only the better models can win the race. In this respect, the product cycle keeps driving Nvidia's sales only as long as each new chip generation is faster than the last. The second trend is the expansion of the market beyond the hyperscalers, into Sovereign AI and other industries such as carmakers, biotechnology and health-care companies.

Colette Kress on growing demand for AI compute:

“As generative AI makes its way into more consumer Internet applications, we expect to see continued growth opportunities as inference scales both with model complexity as well as with the number of users and number of queries per user, driving much more demand for AI compute. In our trailing four quarters, we estimate that inference drove about 40% of our Data Center revenue. Both training and inference are growing significantly.”

On Sovereign AI demand:

“Data Center revenue continues to diversify as countries around the world invest in Sovereign AI. Sovereign AI refers to a nation’s capabilities to produce artificial intelligence using its own infrastructure, data, workforce and business networks. Nations are building up domestic computing capacity through various models. Some are procuring and operating Sovereign AI clouds in collaboration with state-owned telecommunication providers or utilities. Others are sponsoring local cloud partners to provide a shared AI computing platform for public and private sector use.”

“we believe Sovereign AI revenue can approach the high single-digit billions this year. The importance of AI has caught the attention of every nation”.

Jensen Huang on demand for AI training and inference (training is the process of teaching an AI model to perform a given task; inference is the trained model in action, producing predictions or conclusions without human intervention):

“Strong and accelerated demand — accelerating demand for generative AI training and inference on Hopper platform propels our Data Center growth. Training continues to scale as models learn to be multimodal, understanding text, speech, images, video and 3D and learn to reason and plan.”

“The demand for GPUs in all the data centers is incredible. We’re racing every single day. And the reason for that is because applications like ChatGPT and GPT-4o, and now it’s going to be multi-modality and Gemini and its ramp and Anthropic and all of the work that’s being done at all the CSPs are consuming every GPU that’s out there.”

On demand from startups:

“There’s also a long line of generative AI startups, some 15,000, 20,000 startups that in all different fields from multimedia to digital characters”

“the demand, I think, is really, really high and it outstrips our supply. (…) Longer term, we’re completely redesigning how computers work. And this is a platform shift. Of course, it’s been compared to other platform shifts in the past. But time will clearly tell that this is much, much more profound than previous platform shifts. And the reason for that is because the computer is no longer an instruction-driven only computer. It’s an intention-understanding computer.”

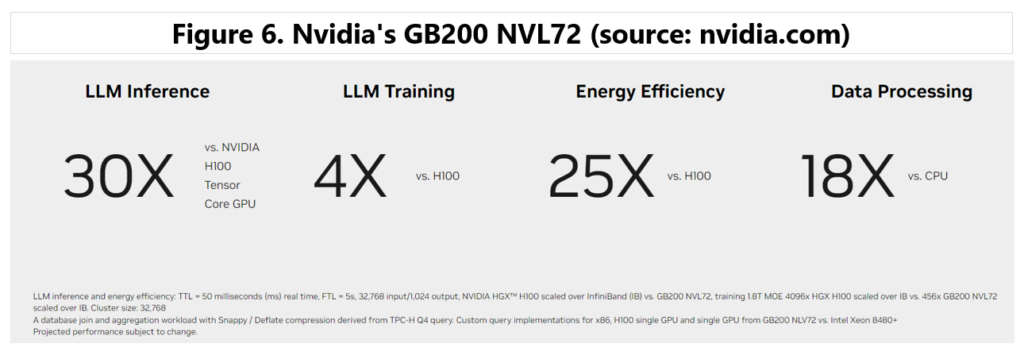

Nvidia is rapidly introducing new, faster products. In Data Center GPUs, for example, the H100 Tensor Core GPU was followed by the H200 Tensor Core GPU, and the next step is the GB200 NVL72.

For example, Tesla has expanded its AI training cluster to 35,000 H100 GPUs. Jensen Huang: “Enterprises drove strong sequential growth in Data Center this quarter. We supported Tesla’s expansion of their training AI cluster to 35,000 H100 GPUs. Their use of NVIDIA AI infrastructure paved the way for the breakthrough performance of FSD Version 12, their latest autonomous driving software based on Vision.”

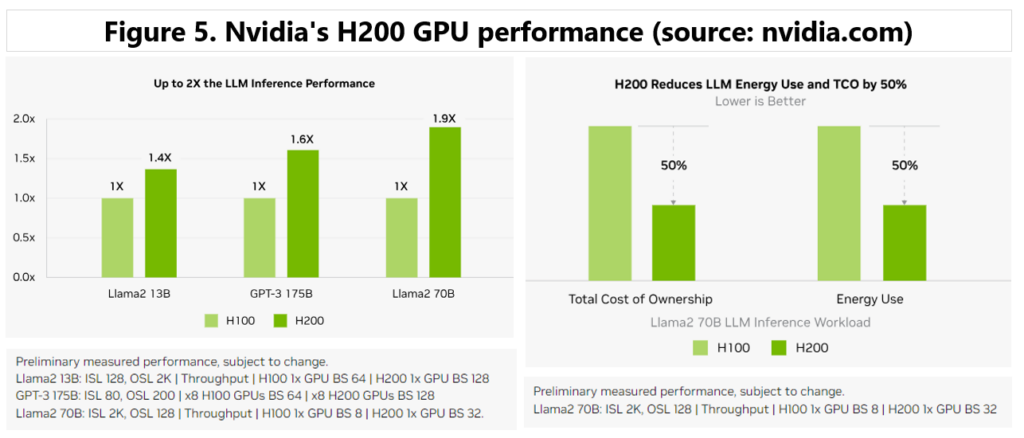

The H200 boosts inference speed by up to 2X compared with the H100 when running LLMs such as Llama 2. The H200 also reduces TCO (total cost of ownership) by 50% and energy use by 50% (see Figure 5).

Compared with the H100, the GB200 NVL72 delivers 30X faster real-time trillion-parameter LLM inference and 4X faster LLM training (see Figure 6).

This material is advertising information. It is educational and informational in nature and expresses the author's own assessments, reflections and opinions. This material is provided for information purposes only and does not constitute an offer, including an offer within the meaning of Article 66, or an invitation to conclude a contract within the meaning of Article 71 of the Polish Civil Code of 23 April 1964 (consolidated text: Journal of Laws of 2020, items 1740 and 2320), nor a public offering within the meaning of Article 3 of the Act of 29 July 2005 on Public Offering, Conditions Governing the Introduction of Financial Instruments to Organised Trading, and Public Companies (consolidated text: Journal of Laws of 2022, item 2554; of 2023, items 825 and 1723), nor an offer of securities to the public within the meaning of Article 2(d) of Regulation (EU) 2017/1129 of the European Parliament and of the Council of 14 June 2017 on the prospectus to be published when securities are offered to the public or admitted to trading on a regulated market, and repealing Directive 2003/71/EC (OJ L 168, 30.06.2017, p. 12). Nor does this material constitute a recommendation, an invitation, or legal, tax, financial or investment advisory services related to investing in any securities. This material may not serve as the basis for a decision to make any investment in securities or financial instruments. The information contained in this material does not constitute a recommendation within the meaning of Regulation (EU) No 596/2014 of the European Parliament and of the Council of 16 April 2014 on market abuse (market abuse regulation) and repealing Directive 2003/6/EC of the European Parliament and of the Council and Commission Directives 2003/124/EC, 2003/125/EC and 2004/72/EC (OJ L 173/1, 12.06.2014). NDM S.A. is not responsible for the truthfulness, reliability, completeness or timeliness of the data and information contained in this presentation. NDM S.A. also accepts no liability whatsoever for any damage resulting from the use of this material or of the information and data contained in it. The content of this material has been prepared on the basis of studies compiled to the best of NDM S.A.'s knowledge and using publicly available information and data, unless another source of data is expressly indicated.